Sunday, 11 July 2010

What makes the LHC tick?

To start, look at the mostly circular cavern, 27 kilometers in circumference, that houses the accelerator. It has an average depth of 100 meters, but in fact it's not actually horizontal: its plane is tilted 1.4 percent to keep it as shallow as possible, minimizing the expense of digging vertical shafts while placing the cavern in a subterranean sandstone layer.

Tidal forces from the moon cause the Earth's crust to rise about 25cm, an effect that increases the LHC's circumference by 1mm. That may not sound significant, but it must be factored into calculations.

The cavern itself is recycled from an earlier accelerator, the LEP (Large Electron-Positron) accelerator, to cut costs.

"You get the biggest tunnel you can afford. It's the one thing you can't change after the fact," said Tom LeCompte, physics coordinator for one of the major LHC experiments, ATLAS. Bigger accelerators are desirable because the more the path of charged particles curves, the more energy they lose through what's called synchrotron radiation.

There's something of a tension between those who operate the LHC and those who are running its experiments. In this early stage of its real work, a lot of time is devoted to working out the kinks and understanding the LHC.

"It's a machine in its very youth," said Mirko Pojer, a physicist and the engineer in charge of LHC operations. "Most of the things are not routine."

To get protons and lead ions up to full speed, the particles travel through some of CERN's history as a center of nuclear physics research. Two previously state-of-the-art accelerators, the Proton Synchrotron that started operation in 1959, and the Super Proton Synchrotron that started in 1976, are now mere LHC stepping stones on the way to higher energies.

The LHC has two beams that circulate in opposite directions on separate paths. At four locations, the two beams collide, producing the showers of particles researchers study. The nature of the particles is inferred from characteristics such as how much energy they release when they are absorbed at the edges of the detector; other detectors track the particles as they travel, letting physicists gauge their properties by how much their path is curved by a magnetic field, for example.

One of the standout features that lets the LHC reach such tremendous energy is its collection of magnets, supercooled to 1.9 Kelvin, or -456.25 degrees Fahrenheit. At this temperature, the magnets and the cables that connect them are superconducting, meaning that electrical current can travel within them without losing energy.
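The temperature conversion quoted above can be checked with a few lines of Python. This is only a minimal sketch; the function name `kelvin_to_fahrenheit` is an illustrative choice, not something from the article.

```python
def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert kelvin to degrees Fahrenheit via degrees Celsius."""
    celsius = kelvin - 273.15
    return celsius * 9.0 / 5.0 + 32.0

# Operating temperature of the LHC magnets as quoted in the article.
magnet_temperature_f = kelvin_to_fahrenheit(1.9)
print(f"1.9 K is {magnet_temperature_f:.2f} degrees Fahrenheit")
```

The result agrees with the -456.25 degrees Fahrenheit given in the text.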

A total of 1,232 magnetic dipoles are responsible for steering the beams around the LHC's curve, and separate devices called radiofrequency cavities accelerate the ions and group them into bunches.

These bunches pass through each other like two swarms of very tiny bees, and sometimes the protons actually collide. So far, the LHC operators haven't filled the accelerator to capacity. Eventually each beam will consist of 2,808 bunches, each with 100 billion protons, spaced about 7 meters apart, traveling around the ring 11,245 times per second, and producing 600 million collisions per second.
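To see how these figures fit together, here is a rough Python sketch. It assumes, as a simplification not stated in the article, that at a given collision point each bunch meets one opposing bunch per trip around the ring and that the 600 million collisions per second refer to a single collision point. The function names are illustrative.

```python
# Figures quoted in the article for a completely filled LHC.
BUNCHES_PER_BEAM = 2_808
REVOLUTIONS_PER_SECOND = 11_245
COLLISIONS_PER_SECOND = 600e6

def crossings_per_second(bunches: int, revolutions: int) -> int:
    """Bunch crossings per second at one collision point (simplified model)."""
    return bunches * revolutions

def collisions_per_crossing(collisions: float, crossings: int) -> float:
    """Average number of proton collisions each time two bunches cross."""
    return collisions / crossings

crossings = crossings_per_second(BUNCHES_PER_BEAM, REVOLUTIONS_PER_SECOND)
per_crossing = collisions_per_crossing(COLLISIONS_PER_SECOND, crossings)

print(f"{crossings:,} bunch crossings per second")
print(f"about {per_crossing:.0f} collisions per crossing")
```

Under these assumptions, of the roughly 100 billion protons in each bunch, only about 19 pairs actually collide per crossing, which is why the swarm-of-bees picture fits.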

Maxed out today, the LHC produces collisions of two protons with a total energy of 7 tera-electron volts, or TeV, but after a coming shutdown period to upgrade the LHC, it's planned to reach a total energy of 14TeV.

That's actually kind of a feeble amount of energy by one view--a flying mosquito has about 1TeV worth of energy. What makes it impressive is that energy is confined to the very tiny volume of a proton collision. To attain it, the LHC consumes about the same amount of electrical power as all of Geneva.
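A short Python sketch puts these energies into everyday units. It assumes that each proton carries half of the planned 14TeV collision energy and uses the full-capacity bunch figures quoted earlier; the function names are illustrative, and the stored-energy estimate is a back-of-the-envelope figure rather than an official number.

```python
# One electron volt in joules (exact by SI definition).
ELECTRON_VOLT_IN_JOULES = 1.602176634e-19

def tev_to_joules(tev: float) -> float:
    """Convert an energy in tera-electron volts to joules."""
    return tev * 1e12 * ELECTRON_VOLT_IN_JOULES

def stored_beam_energy(bunches: int, protons_per_bunch: float,
                       tev_per_proton: float) -> float:
    """Total kinetic energy in joules carried by one full beam."""
    return bunches * protons_per_bunch * tev_to_joules(tev_per_proton)

# Energy of a single 7TeV collision: about a millionth of a joule.
print(f"7 TeV = {tev_to_joules(7):.3e} J")

# Assumption: 7 TeV per proton (half of 14 TeV), 2,808 bunches of 100 billion.
beam_joules = stored_beam_energy(2_808, 100e9, 7.0)
print(f"one full beam stores roughly {beam_joules / 1e6:.0f} MJ")
```

A single collision is tiny on a human scale, but a full beam of such protons adds up to hundreds of megajoules.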

Pojer was off duty on September 19, 2008, when the LHC hit its roughest patch. The LHC had done well just days earlier when researchers fired it up with its first beam. Pojer had just sat down to a congratulatory glass of champagne when a colleague called: "Run here, immediately. It's urgent."

It turns out a faulty electrical connection between two magnets had heated up, causing an explosive helium leak. The helium used to cool the LHC's magnets is so cold it's what's called a superfluid, and heating it up so it turns into a gas causes big problems with gas pressure. Some of the massive magnet structures of the accelerator were shifted half a meter by the forces involved.

"Everybody was depressed and astonished," Pojer said of the problem. "Slowly we recovered," though, and a happier day came on March 30, 2010, when he was the engineer in charge of ramping the LHC up to 3.5TeV energy level.

"It was amazing and exciting," Pojer said, and indeed there was jubilation among the crews and researchers. Now, when the engineers in charge of running the LHC aren't working on refinements such as a tighter beam that increases the chances of collisions, the LHC is used for its scientific mission.

The LHC's hardware is largely located underground, but on the surface are several control rooms, each festooned with large monitors, for overseeing the operations and the experiments. But a significant part of the LHC's effective operation actually takes place far from CERN.

That's because a worldwide grid of computers is used to process the data that actually comes from the experiments. CERN maintains a primary copy of the data produced, but several tier-one partners have copies of parts, and about 130 to 150 tier-two partners keep their own copies, which many scientists actually use in their research.

That's not to say CERN's computers aren't central. A data center with hundreds of machines churns night and day to process the data. "It never stops, not even at Christmas," said Ian Bird, worldwide LHC computing grid project leader, with a million computing jobs in the center in flight at any given moment.

That's because LHC experiments don't lack for data. Experiments "have a knob," a way to adjust how much data is captured. Collectively, the LHC experiments produce about 15 petabytes of raw data each year that must be stored, processed, and analyzed.
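To get a feel for that volume, the sketch below converts 15 petabytes per year into an average sustained rate. It assumes decimal petabytes (10^15 bytes) and data spread evenly across a 365.25-day year, neither of which the article specifies; real data taking is burstier.

```python
PETABYTE_IN_BYTES = 1e15                 # assumption: decimal petabyte
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # assumption: evenly spread over a year

def average_bytes_per_second(petabytes_per_year: float) -> float:
    """Average sustained data rate implied by a yearly data volume."""
    return petabytes_per_year * PETABYTE_IN_BYTES / SECONDS_PER_YEAR

rate = average_bytes_per_second(15)
print(f"15 PB per year averages about {rate / 1e6:.0f} MB per second")
```

Under these assumptions the average works out to a little under half a gigabyte every second, around the clock.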

Data processing is needed to sift the interesting unusual events out from the sea of background noise, to find enough of them to prove they aren't flukes, and to offer precise measurements. There was a day when individual events at particle accelerators were observed in bubble chambers or recorded on film, but today computers are an essential component in the LHC's scientific investigations.

http://news.cnet.com/8301-30685_3-20009671-264.html

The Incredible Shrinking Proton That Could Rattle the Physics World

But the big story this week in Nature is that we might have been wrong all along in estimating something very basic about the humble proton: its size. A team from the Paul-Scherrer Institute in Switzerland that’s been tackling this for a decade says its arduous measurements of the proton show it is 4 percent smaller than the previous best estimate. For something as simple as the size of a proton, one of the basic measurements upon which the standard model of particle physics is built, 4 percent is a vast expanse that could shake up quantum electrodynamics if it’s true.

If the [standard model] turns out to be wrong, “it would be quite revolutionary. It would mean that we know a lot less than we thought we knew,” said physicist Peter J. Mohr of the National Institute of Standards and Technology in Gaithersburg, Md., who was not involved in the research. “If it is a fundamental problem, we don’t know what the consequences are yet” [Los Angeles Times].

Simply, the long-standing value used for a proton’s radius is 0.8768 femtometers (a femtometer equals one quadrillionth of a meter). But the study team found it to be 0.84184 femtometers. How’d they make their measurement? First, think of the standard picture of electrons orbiting around a proton:

According to quantum mechanics, an electron can orbit only at certain specific distances, called energy levels, from its proton. The electron can jump up to a higher energy level if a particle of light hits it, or drop down to a lower one if it lets some light go. Physicists measure the energy of the absorbed or released light to determine how far one energy level is from another, and use calculations based on quantum electrodynamics to transform that energy difference into a number for the size of the proton [Wired.com].

That was how physicists derived their previous estimate, using simple hydrogen atoms. But this team relied on muons instead of electrons. Muons are 200 times heavier than electrons; they orbit closer to protons and are more sensitive to the proton’s size. However, they don’t last long and there aren’t many of them, so the team had to be quick:

The team knew that firing a laser at the atom before the muon decays should excite the muon, causing it to move to a higher energy level—a higher orbit around the proton. The muon should then release the extra energy as x-rays and move to a lower energy level. The distance between these energy levels is determined by the size of the proton, which in turn dictates the frequency of the emitted x-rays [National Geographic].

Thus, they should have seen the specific frequency related to the accepted size of a proton. Just one problem: The scientists didn’t see that frequency. Instead, their x-ray readings corresponded to the 4-percent-smaller size.
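The "4 percent" figure follows directly from the two radii quoted earlier. A minimal Python check, with an illustrative function name:

```python
ACCEPTED_RADIUS_FM = 0.8768    # long-standing value, in femtometers
MUONIC_RADIUS_FM = 0.84184     # value from the muon measurement

def percent_smaller(old: float, new: float) -> float:
    """How much smaller `new` is than `old`, as a percentage of `old`."""
    return (old - new) / old * 100.0

shrinkage = percent_smaller(ACCEPTED_RADIUS_FM, MUONIC_RADIUS_FM)
print(f"The new radius is {shrinkage:.2f} percent smaller")
```

The difference comes to just under 4 percent of the accepted value.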

Now the task at hand is to check whether this study is somehow flawed, or is in fact a finding that will shake up physics.

In an editorial accompanying the report in the journal Nature, physicist Jeff Flowers of the National Physical Laboratory in Teddington, England, said there were three possibilities: Either the experimenters have made a mistake, the calculations used in determining the size of the proton are wrong or, potentially most exciting and disturbing, the standard model has some kind of problem [Los Angeles Times].

Black Holes - What Are Black Holes and How Do They Form?

What Is a Black Hole?

Simply put, a black hole is a region of space that is so incredibly dense that not even light can escape from the surface. However, it is this fact that often leads to misunderstanding. Black holes, strictly speaking, don't have any greater gravitational reach than any other star of the same mass. If our Sun suddenly became a black hole of the same mass, the rest of the objects, including Earth, would be unaffected gravitationally. The Earth would remain in its current orbit, as would the rest of the planets. (Of course other things would be affected, such as the amount of light and heat that Earth received. So we would still be in trouble, but we wouldn't get sucked into the black hole.)

There is a region of space surrounding the black hole from where light cannot escape, hence the name. The boundary of this region is known as the event horizon, and it is defined as the point where the escape velocity from the gravitational field is equal to the speed of light. The calculation of the radial distance to this boundary can become quite complicated when the black hole is rotating and/or is charged.

For the simplest case (a non-rotating, charge neutral black hole), the entire mass of the black hole would be contained within the event horizon (a necessary requirement for all black holes). The event horizon radius ($R_s$) would then be defined as $R_s = 2GM/c^2$.
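As a worked example of the event horizon formula for the non-rotating, uncharged case, the sketch below evaluates it for an object with the mass of our Sun. The constants are standard reference values; the solar mass is an approximate figure and the function name is illustrative.

```python
GRAVITATIONAL_CONSTANT = 6.67430e-11   # G, in m^3 kg^-1 s^-2
SPEED_OF_LIGHT = 299_792_458.0         # c, in m/s
SOLAR_MASS_KG = 1.989e30               # approximate mass of the Sun

def schwarzschild_radius(mass_kg: float) -> float:
    """Event horizon radius Rs = 2GM/c^2 of a non-rotating, uncharged mass."""
    return 2.0 * GRAVITATIONAL_CONSTANT * mass_kg / SPEED_OF_LIGHT ** 2

sun_radius_m = schwarzschild_radius(SOLAR_MASS_KG)
print(f"A one-solar-mass black hole has Rs of about {sun_radius_m / 1000:.2f} km")
```

For comparison, the Sun's actual radius is roughly 700,000 km, so its entire mass would have to be squeezed into a sphere only a few kilometers across.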

How Do Black Holes Form?

This is actually a somewhat complex question, mainly because there are different types of black holes. The most common black holes are known as stellar mass black holes, as they are roughly up to a few times the mass of our Sun. These types of black holes are formed when large main sequence stars (10 - 15 times the mass of our Sun) run out of nuclear fuel in their cores. The result is a massive supernova explosion, leaving a black hole core behind where the star once existed.

The two other types of black holes are supermassive black holes -- black holes with masses millions or billions of times the mass of the Sun -- and micro black holes -- black holes with extremely small masses, perhaps as small as 20 micrograms. In both cases the mechanisms for their creation are not entirely clear. Micro black holes exist in theory but have not been directly detected, while supermassive black holes are found to exist in the cores of most galaxies.

While it is possible that supermassive black holes result from the merger of smaller, stellar mass black holes and other matter, it is also possible that they form from the collapse of a single, extremely high mass star. However, no such star has ever been observed.

Meanwhile, micro black holes would be created during the collision of two very high energy particles. It is thought that this happens continuously in the upper atmosphere of Earth, and is likely to happen in particle physics experiments such as those at CERN. But no need to worry, we are not in danger.

How Do We Know Black Holes Exist If We Can't "See" Them?

Since light cannot escape from the region around a black hole bound by the event horizon, it is not possible to directly "see" a black hole. However, it is possible to observe these objects by their effect on their surroundings.

Black holes that are near other objects will have a gravitational effect on them. Going back to the earlier example, suppose that our Sun became a black hole of one solar mass. An alien observer somewhere else in the galaxy studying our solar system would see the planets, comets and asteroids orbiting a central point. They would deduce that the planets and other objects were bound in their orbits by a one solar mass object. Since they would see no such star, the object would correctly be identified as a black hole.

Another way that we observe black holes is by utilizing another property of black holes, specifically that they, like all massive objects, will cause light to bend -- due to the intense gravity -- as it passes by. As stars behind the black hole move relative to it, the light emitted by them will appear distorted, or the stars will appear to move in an unusual way. From this information the position and mass of the black hole can be determined.

There is another type of black hole system, known as a microquasar. These dynamic objects consist of a stellar mass black hole in a binary system with another star, usually a large main sequence star. Due to the immense gravity of the black hole, matter from the companion star is funneled off onto a disk surrounding the black hole. This material then heats up as it begins to fall into the black hole through a process called accretion. The result is the creation of X-rays that we can detect using telescopes orbiting the Earth.

Hawking Radiation

The final way that we could possibly detect a black hole is through a mechanism known as Hawking radiation. Named for the famed theoretical physicist and cosmologist Stephen Hawking, Hawking radiation is a consequence of thermodynamics that requires that energy escape from a black hole.

The basic (perhaps oversimplified) idea is that, due to natural interactions and fluctuations in the vacuum (the very fabric of space time if you will), matter will be created in the form of an electron and anti-electron (called a positron). When this occurs near the event horizon, one particle will be ejected away from the black hole, while the other will fall into the gravitational well.

To an observer, all that is "seen" is a particle being emitted from the black hole. The particle would be seen as having positive energy, meaning, by symmetry, that the particle that fell into the black hole would have negative energy. The result is that as a black hole ages it loses energy, and therefore loses mass (by Einstein's famous equation, $E = mc^2$).

Ultimately, it is found that black holes will eventually completely decay unless more mass is accreted. And it is this same phenomenon that is responsible for the short lifetimes expected of micro black holes.
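To illustrate how strongly the decay time depends on mass, the sketch below uses the standard textbook estimate for the evaporation time of a non-rotating black hole, t = 5120 π G² M³ / (ħ c⁴). This formula is not given in the article, and it ignores accretion and the details of which particles are emitted, so the numbers are order-of-magnitude figures only. The 20 microgram mass is the micro black hole figure quoted earlier.

```python
import math

GRAVITATIONAL_CONSTANT = 6.67430e-11      # G, in m^3 kg^-1 s^-2
SPEED_OF_LIGHT = 299_792_458.0            # c, in m/s
REDUCED_PLANCK = 1.054571817e-34          # hbar, in J s
SOLAR_MASS_KG = 1.989e30                  # approximate mass of the Sun
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def evaporation_time_seconds(mass_kg: float) -> float:
    """Textbook Hawking evaporation estimate: 5120*pi*G^2*M^3 / (hbar*c^4)."""
    numerator = 5120.0 * math.pi * GRAVITATIONAL_CONSTANT ** 2 * mass_kg ** 3
    return numerator / (REDUCED_PLANCK * SPEED_OF_LIGHT ** 4)

micro_seconds = evaporation_time_seconds(20e-9)   # 20 micrograms, in kilograms
solar_years = evaporation_time_seconds(SOLAR_MASS_KG) / SECONDS_PER_YEAR

print(f"20 microgram black hole: about {micro_seconds:.1e} seconds")
print(f"one solar mass black hole: about {solar_years:.1e} years")
```

Because the lifetime scales with the cube of the mass, a micro black hole would vanish almost instantly, while a stellar mass black hole would outlast the current age of the universe by an enormous margin.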

Extra Dimensions Ten Billion Times Smaller Than An Atom

What is the form of the universe?

Physicists created the Standard Model to explain the form of the universe—the fundamental particles, their properties, and the forces that govern them. The predictions of this tried-and-true model have repeatedly proven accurate over the years. However, there are still questions left unanswered. For this reason, physicists have theorized many possible extensions to the Standard Model. Several of these predict that at higher collision energies, like those at the LHC, we will encounter new particles like the Z', pronounced "Z prime." It is a theoretical heavy boson whose discovery could be useful in developing new physics models. Depending on when and how we find a Z' boson, we will be able to draw more conclusions about the models it supports, whether they involve superstrings, extra dimensions, or a grand unified theory that explains everything in the universe. Whatever physicists discover beyond the Standard Model will open new frontiers for exploring the nature of the universe.

What is the universe made of?

Since the 1930s, scientists have been aware that the universe contains more than just regular matter. In fact, only a little over 4 percent of the universe is made of the matter that we can see. Of the remaining 96 percent, about 23 percent is dark matter and everything else is dark energy, a mysterious substance that creates a gravitational repulsion responsible for the universe’s accelerating expansion. One theory regarding dark matter is that it is made up of the as-yet-unseen partners of the particles that make up regular matter. In a supersymmetric universe, every ordinary particle has one of these superpartners. Experiments at the LHC may find evidence to support or reject their existence.

Are there extra dimensions?

We experience three dimensions of space. However, the theory of relativity states that space can expand, contract, and bend. It’s possible, therefore, that we encounter only three spatial dimensions because they’re the only ones our size enables us to see, while other dimensions are so tiny that they are effectively hidden. Extra dimensions are integral to several theoretical models of the universe; string theory, for example, suggests as many as seven extra dimensions of space. The LHC is sensitive enough to detect extra dimensions ten billion times smaller than an atom. Experiments like ATLAS and CMS are hoping to gather information about how many other dimensions exist, what particles are associated with them, and how they are hidden.

(Editor's note: the most powerful microscopes now in existence can see into the upper picometer range (trillionths of a meter); nanotechnology is measured in nanometers, or billionths of a meter, roughly ten times the diameter of a hydrogen atom.)

What are the most basic building blocks of matter?

Particle physicists hope to explain the makeup of the universe by understanding it from its smallest, most basic parts. Today, the fundamental building blocks of the universe are believed to be quarks and leptons; however, some theorists believe that these particles are not fundamental after all. The theory of compositeness, for example, suggests that quarks are composed of even smaller particles. Efforts to look closely at quarks and leptons have been difficult. Quarks are especially challenging, as they are never found in isolation but instead join with other particles to form hadrons, such as the protons that collide in the LHC. With the LHC’s high energy levels, scientists hope to collect enough data about quarks to reveal whether anything smaller is hidden inside.

Why do some particles have mass?

Through the theory of relativity, we know that particles moving at the speed of light have no mass, while particles moving slower than light speed do have mass. Physicists theorize that the omnipresent Higgs field slows some particles to below light speed, and thus imbues them with mass. We can’t study the Higgs field directly, but it is possible that an accelerator could excite this field enough to "shake loose" Higgs boson particles, which physicists should be able to detect. After decades of searching, physicists believe that they are close to producing collisions at the energy level needed to detect Higgs bosons.

Sunday, 25 April 2010

Dark matter may give neutron stars black hearts

DARK matter may be prompting black holes to appear spontaneously in the hearts of distant exotic stars. If so, this could hint at the nature of dark matter.

Arnaud de Lavallaz and Malcolm Fairbairn of King's College London wondered what would happen when dark matter - which makes up most of the mass of galaxies - is sucked into the heart of neutron stars. These stars, the remnants of supernova explosions, are the densest known stars in the universe. It turns out that the outcome depends on the nature of dark matter.

Most of the favoured theories of dark matter suggest each particle of the stuff is also its own antiparticle, meaning that the particles should annihilate each other when they meet. But Fairbairn and de Lavallaz considered a dark matter particle of a different type, which is not also its own antiparticle.

The pair calculated what would happen if dark matter particles like these were attracted by the intense gravity of neutron stars. Because they would not annihilate each other, the dark matter particles would end up forming a smaller, dense star at the heart of the neutron star. If the neutron star were near the centre of the galaxy, for example, and surrounded by an abundance of dark matter, then it would continue to accrete dark matter.

Eventually, the mass of the dark matter star would exceed its "Chandrasekhar limit" - beyond which a star cannot withstand gravitational pressure. The dark matter star would collapse into a black hole. "Then the neutron star won't be able to survive anymore, and it'll collapse too," says Fairbairn. "It would be pretty catastrophic."

Their calculations show that if a neutron star collapsed in this way the result would be a burst of gamma rays, which could be spotted from Earth (arxiv.org/abs/1004.0629).

Various underground experiments back on Earth have been trying to detect dark matter, using different techniques. While none of the major experiments have seen anything yet, physicists running the Dark Matter (DAMA) experiment inside the Gran Sasso mountain in Italy have been saying for some time that dark matter particles are hitting their detector. Most physicists are sceptical of the DAMA results because they don't sit well with favoured theories on the nature of dark matter.

Fairbairn says that the DAMA experiment could be sensitive to dark matter particles that do not self-annihilate, which might explain why it is seeing something and others are not.

Dan Hooper of Fermilab in Batavia, Illinois, agrees the pair's scenario is plausible because the existence of dark matter particles that do not self-annihilate cannot be ruled out. "We could look for evidence that neutron stars don't live very long in regions with a lot of dark matter," he says. "I find exciting the prospect of using exotic stars as dark matter detectors."

http://www.newscientist.com/article/mg20627564.900-dark-matter-may-give-neutron-stars-black-hearts.html?DCMP=OTC-rss&nsref=physics-math

Physicists find a particle accelerator in the sky

The first evidence that thunderstorms can function as huge natural particle accelerators has been collected by an international team of researchers.

In a presentation at a meeting of the Royal Astronomical Society in Glasgow last week, Martin Füllekrug of Bath University described how the team detected radio waves coinciding with the appearance of "sprites" – glowing orbs that occasionally flicker into existence above thunderstorms. The radio waves suggest the sprites can accelerate nearby electrons, creating a beam with the same power as a small nuclear power plant.

"The discovery of the particle accelerator allows [one] to apply the knowledge gained in particle physics to the real world, and put the expected consequences to experimental testing," Füllekrug told physicsworld.com.

An old idea

The idea of natural particle accelerators existing just kilometres above our heads first came in 1925, when the UK physicist and Nobel laureate Charles Wilson investigated the effects of a thundercloud's electric field. Wilson claimed that the electric field would cause an electrical breakdown of the Earth's atmosphere above the cloud, leading to transient phenomena such as sprites.

These sprites, physicists suggested, would do more than just light up the sky. As highly energetic particles or "cosmic rays" from space bombard our atmosphere, they strip air molecules of their outer electrons. In the presence of a sprite's electric field, these electrons could be forced upward in a narrow beam from the troposphere to near-Earth space. Moreover, the changing electron current would, via Maxwell's equations, produce electromagnetic waves in the radio-frequency range.

In 1998 Füllekrug's colleague Robert Roussel-Dupré of Los Alamos National Laboratory in New Mexico, US, used a supercomputer to simulate these radio waves. The simulations predicted they would come in pulses with a fairly flat spectrum – contrary to the electromagnetic spectrum of the lightning itself, which increases at lower frequencies.

Predictions confirmed

In 2008, while a group of European scientists timed the arrival of sprites from a mountain top in the French Pyrenées, Füllekrug was on the ground with a purpose-built radio-wave detector. The signals he detected coincided with the sprites and matched the characteristics of Roussel-Dupré's predictions.

"It's intriguing to see that nature creates particle accelerators just a few miles above our heads," says Füllekrug, adding: "They provide a fascinating example of the interaction between the Earth and the wider universe."

Füllekrug notes that he has no particular applications in mind for a sky-based particle accelerator, although he believes there may be wider implications for science. Researchers have many questions about the middle atmosphere because it is so difficult to set up observational platforms there. But by employing what physicists have learned about how such electron beams interact with matter, researchers could use this phenomenon to study this part of the atmosphere.

Indeed, we might be hearing a lot more about natural particle accelerators in the near future. The IBUKI satellite from Japan could soon be looking at the movement of charged particles in the atmosphere. In the next few years several missions – including CHIBIS from Russia and TARANIS from France – should provide more data about these accelerators.

http://physicsworld.com/cws/article/news/42368

Dwarf planets are not space potatoes

In 2006 there was an outcry from many astronomers when Pluto was stripped of its planetary status and renamed as a dwarf planet. The aggrieved feel that the distinction is rather arbitrary, especially as it is difficult to distinguish dwarf planets from other bodies in the solar system. Now, however, a pair of researchers are offering a more rigid definition by calculating the lower limit on the size of dwarf planets for the first time.

The IAU's definition of a planet is a celestial body that meets three strict criteria. First, it must be in orbit around the Sun. Second, it must have sufficient mass that its self-gravity overcomes other forces in the rigid body so that it assumes a nearly round shape. Finally, it must also have cleared the neighbourhood around its orbit by drawing in other space material with its gravitational field.

A dwarf planet meets all of these criteria except the last. Indeed, this was the downfall of Pluto, whose orbital path overlaps with other objects such as asteroids and the planet Neptune.

This categorization, however, does not sit happily with many astronomers who point out that Neptune also fails the test because of its overlap with Pluto. Furthermore, there is no agreement on how small dwarf planets can be, making it difficult to estimate the number of dwarf planets in the solar system.

Potato radius

In this latest research, Charles Lineweaver and Marc Norman at the Australian National University address this issue by deriving from first principles a lower limit on the radius of protoplanets. They calculate, using their new equation, that asteroids must have a radius of at least 300 km and icy moons must have a radius above 200 km for self-gravity to dominate and create spherical bodies. Below this radius, a balance between gravitational and electronic forces can create all sorts of shapes referred to as rounded potatoes.

The new categorization increases the number of bodies that should now be classified as "dwarf planets". Previously, astronomers had known the size of a lot of these bodies, but not whether they were spherical. "Measuring the shape of objects as a function of size can help us determine how hot these objects were when their shapes were set early in their formation," says Lineweaver.

Decaying beauty spied for first time by LHC

The LHC started work on 30 March, and one of its four large detectors detected evidence of a beauty quark – also, less poetically, known as a bottom quark – on 5 April.

The find should be the first of many beauty decays that LHCb, the LHC's beauty experiment, will observe, and demonstrates the detector is working as planned.

This first recorded particle is a meson composed of an anti-beauty quark – the beauty quark's antiparticle – and an up quark – one of the two common quarks that make up protons and neutrons. While up quarks last for billions of years, the large beauty quarks swiftly decay into lower-energy particles in about $1.5 \times 10^{-12}$ seconds.

After travelling only 2 millimetres in the accelerator, the beauty quark decayed to a lighter quark – still paired with the original up quark – and the extra energy was carried off in the form of electron-like particles called muons.
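The distance and the lifetime quoted above hint at how fast the particle was moving. Light itself covers less than half a millimetre in 1.5 trillionths of a second, so a 2 millimetre flight only makes sense once relativistic time dilation stretches the particle's lifetime as seen from the detector. The sketch below estimates the required boost, treating the quoted lifetime as the particle's actual decay time in its own rest frame. That is an assumption made purely for illustration, since individual decays happen at random times around the average.

```python
SPEED_OF_LIGHT = 299_792_458.0   # c, in m/s

def boost_factor(flight_distance_m: float, proper_lifetime_s: float) -> float:
    """Return beta*gamma from flight distance L = beta*gamma*c*tau."""
    return flight_distance_m / (SPEED_OF_LIGHT * proper_lifetime_s)

# Figures from the article: 2 millimetres travelled, 1.5e-12 seconds lifetime.
light_distance_mm = SPEED_OF_LIGHT * 1.5e-12 * 1000
beta_gamma = boost_factor(2e-3, 1.5e-12)

print(f"Light travels {light_distance_mm:.2f} mm in that time")
print(f"Implied boost factor (beta*gamma): about {beta_gamma:.1f}")
```

Under this assumption the particle's internal clock ran several times slower than clocks in the laboratory.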

Extremely rare

"It's a very rare event – it's like a needle in a haystack," says Andreas Schopper, a spokesperson for LHCb. "In these 10 million data or so we find this one event."

The particle was detected by LHCb's automatic trigger system, which is designed to recognise unusual events or particles but to ignore the vast majority of proton collisions. Less than 1 per cent of the collisions will be of interest to LHCb scientists.

Once an event is recorded, the details of detector signals are sent out to computing centres on five continents, where over a few days software reconstructs particle tracks. "It is the teamwork of the collaboration," says Schopper. "This is the first time we have really detected and reconstructed such a big particle."

Back to the big bang

LHCb will look at many such decays in order to shed light on what happened to the antimatter that should have been created alongside the matter that makes up our universe.

The experiment is designed to examine what happens to beauty quarks, which form in high-energy explosions – not least the big bang. By comparing the decay products of beauty quarks, LHC researchers hope to find clues as to why our universe seems to favour matter over antimatter.

"While precision measurements will need many millions of beauty particles, as with kisses, the first is always very special," says Jürgen Schukraft, spokesperson for the LHC's ALICE experiment. "It shows that the detector is up to its task, performing very well in identifying the complex decay pattern."

Sunday, 18 April 2010

Einstein's General Relativity Tested Again, Much More Stringently

This time it was the gravitational redshift part of General Relativity; and the stringency? An astonishing better-than-one-part-in-100-million!

How did Steven Chu (US Secretary of Energy, though this work was done while he was at the University of California, Berkeley), Holger Müller (Berkeley), and Achim Peters (Humboldt University in Berlin) beat the previous best gravitational redshift test (in 1976, using two atomic clocks – one on the surface of the Earth and the other sent up to an altitude of 10,000 km in a rocket) by a staggering 10,000 times?

By exploiting wave-particle duality and superposition within an atom interferometer!

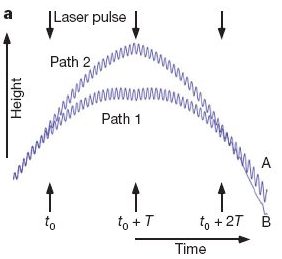

About this figure: Schematic of how the atom interferometer operates. The trajectories of the two atoms are plotted as functions of time. The atoms are accelerating due to gravity and the oscillatory lines depict the phase accumulation of the matter waves. Arrows indicate the times of the three laser pulses. (Courtesy: Nature).

Gravitational redshift is an inevitable consequence of the equivalence principle that underlies general relativity. The equivalence principle states that the local effects of gravity are the same as those of being in an accelerated frame of reference. So the downward force felt by someone in a lift could be equally due to an upward acceleration of the lift or to gravity. Pulses of light sent upwards from a clock on the lift floor will be redshifted when the lift is accelerating upwards, meaning that this clock will appear to tick more slowly when its flashes are compared at the ceiling of the lift to another clock. Because there is no way to tell gravity and acceleration apart, the same will hold true in a gravitational field; in other words the greater the gravitational pull experienced by a clock, or the closer it is to a massive body, the more slowly it will tick.
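To get a feel for the size of the effect, the following is a minimal sketch of the weak-field redshift formula, in which the fractional rate difference between two clocks is the difference in Newtonian gravitational potential divided by c². The function name and the spherical-Earth constants are illustrative assumptions, and the sketch deliberately ignores the velocity-dependent effects a real rocket experiment also has to correct for.

```python
# Fractional gravitational redshift between a clock at altitude and one on the ground.
# Weak-field approximation: delta_f / f = (phi_high - phi_ground) / c^2.
# Assumptions: spherical, non-rotating Earth; clock velocities are ignored.

GM_EARTH = 3.986004e14    # m^3 s^-2, Earth's gravitational parameter
R_EARTH = 6.371e6         # m, mean Earth radius
C = 299_792_458.0         # m/s, speed of light


def fractional_redshift(altitude_m: float) -> float:
    """Return how much faster (fractionally) a clock at altitude_m ticks than one on the ground."""
    phi_ground = -GM_EARTH / R_EARTH
    phi_high = -GM_EARTH / (R_EARTH + altitude_m)
    return (phi_high - phi_ground) / C**2


if __name__ == "__main__":
    for altitude in (1.0, 1.0e7):  # 1 m, and the 10,000 km of the 1976 rocket test
        print(f"altitude {altitude:>12.1f} m -> fractional shift {fractional_redshift(altitude):.3e}")
```

Under these assumptions the 10,000 km case comes out at a few parts in 10¹⁰, while a one-metre height difference gives only about one part in 10¹⁶, which is why very sensitive clocks are needed.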

Confirmation of this effect supports the idea that gravity is geometry – a manifestation of spacetime curvature – because the flow of time is no longer constant throughout the universe but varies according to the distribution of massive bodies. Exploring the idea of spacetime curvature is important when distinguishing between different theories of quantum gravity because there are some versions of string theory in which matter can respond to something other than the geometry of spacetime.

Gravitational redshift, however, as a manifestation of local position invariance (the idea that the outcome of any non-gravitational experiment is independent of where and when in the universe it is carried out) is the least well confirmed of the three types of experiment that support the equivalence principle. The other two – the universality of freefall and local Lorentz invariance – have been verified with precisions of 10⁻¹³ or better, whereas gravitational redshift had previously been confirmed only to a precision of 7×10⁻⁵.

In 1997 Peters used laser trapping techniques developed by Chu to capture cesium atoms and cool them to a few millionths of a degree K (in order to reduce their velocity as much as possible), and then used a vertical laser beam to impart an upward kick to the atoms in order to measure gravitational freefall.

Now, Chu and Müller have re-interpreted the results of that experiment to give a measurement of the gravitational redshift.

In the experiment each of the atoms was exposed to three laser pulses. The first pulse placed the atom into a superposition of two equally probable states – either leaving it alone to decelerate and then fall back down to Earth under gravity's pull, or giving it an extra kick so that it reached a greater height before descending. A second pulse was then applied at just the right moment so as to push the atom in the second state back faster toward Earth, causing the two superposition states to meet on the way down. At this point the third pulse measured the interference between these two states brought about by the atom's existence as a wave, the idea being that any difference in gravitational redshift as experienced by the two states existing at different heights above the Earth's surface would be manifest as a change in the relative phase of the two states.

The virtue of this approach is the extremely high frequency of a cesium atom's de Broglie wave – some 3×10²⁵ Hz. Although during the 0.3 s of freefall the matter waves on the higher trajectory experienced an elapsed time of just 2×10⁻²⁰ s more than the waves on the lower trajectory did, the enormous frequency of their oscillation, combined with the ability to measure amplitude differences of just one part in 1000, meant that the researchers were able to confirm gravitational redshift to a precision of 7×10⁻⁹.
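The quoted frequency can be checked with a short calculation. This is a sketch only: it assumes the figure in the text corresponds to mc²/h for a cesium-133 atom (often called the Compton frequency), and it takes the elapsed-time difference straight from the article rather than deriving it. The variable names are illustrative.

```python
# Matter-wave frequency of a cesium-133 atom, f = m * c^2 / h, and the number of
# extra oscillations accumulated on the upper path for the article's time difference.

PLANCK_H = 6.62607015e-34          # J s
C = 299_792_458.0                  # m/s
ATOMIC_MASS_UNIT = 1.66053907e-27  # kg
CS133_MASS_U = 132.905             # mass of cesium-133 in atomic mass units


def compton_frequency(mass_kg: float) -> float:
    """Frequency associated with the rest energy of a particle of the given mass."""
    return mass_kg * C**2 / PLANCK_H


f_cs = compton_frequency(CS133_MASS_U * ATOMIC_MASS_UNIT)

# Elapsed-time difference between the two paths, as quoted in the text (not derived here).
delta_t = 2e-20  # s

extra_cycles = f_cs * delta_t

if __name__ == "__main__":
    print(f"cesium matter-wave frequency: {f_cs:.2e} Hz")
    print(f"extra oscillations on the upper path: {extra_cycles:.1e}")
```

With these inputs the frequency lands close to 3×10²⁵ Hz, and the tiny time difference still corresponds to several hundred thousand extra oscillations, which is what makes the phase shift measurable.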

As Müller puts it, "If the time of freefall was extended to the age of the universe – 14 billion years – the time difference between the upper and lower routes would be a mere one thousandth of a second, and the accuracy of the measurement would be 60 ps, the time it takes for light to travel about a centimetre."

Müller hopes to further improve the precision of the redshift measurements by increasing the distance between the two superposition states of the cesium atoms. The distance achieved in the current research was a mere 0.1 mm, but, he says, by increasing this to 1 m it should be possible to detect gravitational waves, predicted by general relativity but not yet directly observed.

More Evidence That Size Doesn’t Matter

Astronomers have managed to peer past obscuring dust clouds to gain their first peek at the gestation of a massive proto-star, W33A, which is about 12,000 light years away within the Sagittarius constellation. A spokesperson for the research team, who you may not be surprised to learn is British, described the sight as ‘reassuringly familiar, like a nice cup of tea’.

There has been a standing debate in astronomical circles about whether or not massive stars form in the same way as smaller stars. The issue has been hampered by a lack of observational data on just how massive stars form – as they develop so quickly they are generally only seen in an already fully formed state when they pop out of the obscuring dust clouds of their stellar nursery.

Known as a Massive Young Stellar Object (MYSO), W33A is estimated to be at least 10 solar masses and still growing. Shrouded in dust clouds it cannot be observed in visible light, but much of its infrared radiation passes through those ‘natal’ dust clouds. A research team led by Ben Davies from the University of Leeds collected this light using a combination of adaptive optics and the Near Infrared Integral Field Spectrograph (NIFS), on the Gemini North telescope in Hawaii.

The research team was able to piece together an image of a growing star within an accretion disk – surrounded by a wider torus (like a donut) of gas and dust. There were also clear indications of jets of material being blasted away from the poles of W33A at speeds of 300 kilometres a second. These are all common features that can be observed in the formation of smaller stars.

This adds to other recent findings about the formation of massive stars – including the Subaru Observatory’s direct imaging of a circumstellar disk around the MYSO called HD200775 reported in November 2009 and evidence of rapid formation of planets around massive stars in the W5 stellar nursery, reported by other researchers to the American Astronomical Society in January 2010.

These findings support the view that massive star formation occurs in much the same way as we see in smaller stars, where a centre of mass sucks up material from a surrounding gas cloud and the falling material collects into a spinning, circumstellar accretion disk – often accompanied by polar jets of material flung out by powerful electromagnetic forces within the growing star.

However, at least one clear distinction is apparent between small and massive star formation. The shorter wavelength, high energy radiation of newborn massive stars seems to dissipate the remains of their circumstellar disk more quickly than in smaller stars. This suggests that planet formation is less likely to occur around massive stars, although evidently some of them still manage it.

When black holes go rogue, they kill galaxies

Massive black holes may be kicking the life out of galaxies by ripping out their vital gaseous essence, leaving reddened galactic victims scattered throughout the universe. While the case is not yet closed, new research shows that these black holes have at least the means to commit the violent crime.

It was already known that "supermassive" black holes at the centre of most galaxies sometimes emit vast amounts of radiation. But nobody had a good idea how common such violence is. A snapshot of the universe doesn't give enough information to judge this because the activity of the black holes is thought to be intermittent, depending on how much nearby matter they have to feed on.

Now a team of astronomers have compiled a chronicle of activity going back deep into cosmic history, using the orbiting Chandra telescope to spot X-rays emitted by the black holes together with images from Hubble to look at their host galaxies. Previous surveys with less sensitive instruments were unable to spot the distant faint sources picked up by Chandra and Hubble. The team now have a set of galaxies reaching 13 billion light years.

The team calculate that at least a third of all big galaxies have contained a supermassive black hole emitting at least 10 billion times as much power as the sun, typically for as much as a billion years.

No more babies

Such violence releases enough energy to heat up galactic gas and even blast it right out of the galaxy. Stars are only born in clouds of cool gas, so you end up with a galaxy that is effectively dead, dominated by old red stars instead of the bright blue young stars that grace a "living" spiral galaxy like the Milky Way.

The black holes could be responsible for many of the dead, red galaxies seen in the universe. Most red galaxies are thought to be created when galaxies collide, which could help to feed the central black holes of the merging galaxies, switching on their deadly X-ray emissions. Violent black holes could also explain the existence of isolated lens-shaped galaxies, which do not seem to have suffered major collisions.

Still, it remains unknown how much power the black hole actually channels into galactic gas, says team member Asa Bluck of the University of Nottingham, UK. "So we have not proved that this is the dominant galaxy killer," he told New Scientist. "But it is now the prime suspect in most cases of galaxy death."

First blood to Atlas at the Large Hadron Collider

It's first blood to the massive Atlas detector at the Large Hadron Collider. Just days after the physics programme started, Atlas has reported its first detection of W boson particles.

W bosons have been seen at other colliders, but before any of the detectors at the LHC can attempt to discover new particles they must "rediscover" established ones. It is "an excellent sign" to spot the particles so soon, says Fabiola Gianotti, who heads the Atlas team. "It demonstrates that both the LHC accelerator and Atlas work extremely well."

W bosons decay almost instantly into leptons and neutrinos. On two occasions since the machine began collisions at 7 teraelectronvolts last month, the leptons - either a positron or a muon - have been detected in Atlas's calorimeter and muon chambers. Neutrinos do not interact with the detector, but their presence was inferred from the imbalance of the decay's total momentum - its "missing energy" (see diagram).
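The momentum-imbalance idea can be sketched in a few lines. This is not Atlas's reconstruction software: the event below is made up for illustration, the function names are hypothetical, and only the plane transverse to the beam is considered, since that is where the visible momenta should sum to zero. The transverse mass formula used is the standard one for a lepton plus missing momentum.

```python
import math


def missing_transverse_momentum(visible):
    """Return (px, py) of the momentum needed to balance the visible particles.

    visible: iterable of (px, py) pairs in GeV for everything the detector saw.
    """
    sum_px = sum(px for px, _ in visible)
    sum_py = sum(py for _, py in visible)
    return (-sum_px, -sum_py)


def transverse_mass(lepton_pt, met, delta_phi):
    """Transverse mass of a lepton + missing-momentum system (all in GeV, angle in radians)."""
    return math.sqrt(2.0 * lepton_pt * met * (1.0 - math.cos(delta_phi)))


# Hypothetical event: one energetic muon plus a soft hadronic recoil.
muon = (38.0, 5.0)
recoil = (-6.0, -9.0)

miss_px, miss_py = missing_transverse_momentum([muon, recoil])
met = math.hypot(miss_px, miss_py)
muon_pt = math.hypot(*muon)
delta_phi = math.atan2(muon[1], muon[0]) - math.atan2(miss_py, miss_px)

if __name__ == "__main__":
    print(f"missing transverse momentum: {met:.1f} GeV")
    print(f"transverse mass: {transverse_mass(muon_pt, met, delta_phi):.1f} GeV")
```

For genuine W decays the transverse mass cannot exceed the W mass (apart from resolution effects), so a distribution that falls off sharply near that value is one way such events are recognised.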

The detection is interesting in its own right as new particles, such as the Higgs boson, have been predicted to decay into W bosons, says Atlas physicist Andreas Hoecker. "W bosons are really very central."

David Barney, a member of the rival CMS detector collaboration, points to an "element of healthy competition between the two big general-purpose detectors". He says that many interesting events will be needed from both detectors to produce a complete picture.

Black hole twins spew gravitational waves

Astronomers could be on the cusp of detecting gravitational waves after four decades of trying, according to a team of Polish astrophysicists. They say that if current gravitational-wave detectors are upgraded to search for binary black-hole systems, gravitational waves would be expected "within the first year of operation". If correct, it would open up a new window to the cosmos, allowing astronomers to see the universe with fresh eyes.

Unlike waves of light which travel through space, gravitational waves are ripples in the fabric of space–time itself. Sources of these waves, which were predicted by Einstein's theory of general relativity, include binary systems of compact objects such as neutron stars and black holes. As one of the duo inspirals toward the other, gravitational waves propagate out into space.

Searches for gravitational waves, such as the Laser Interferometer Gravitational-Wave Observatory (LIGO), have concentrated on binary systems of two neutron stars because they were thought to be more numerous, despite being weaker sources than rarer double black hole systems.

Wrong decision

However, a team of researchers, led by Chris Belczynski of the Los Alamos National Laboratory, report that these projects have taken the wrong option, saying that double black hole systems may be far more common than previously thought. The reason is related to stars' metallicity, which is the fraction of elements that are heavier than helium. The lower the metallicity the less mass is lost at the end of the star's life and therefore the black holes that form are more likely to survive to become a black hole binary.

Until now, models have assumed that most stars had a similar metallicity to the Sun. But by analysing data from the Sloan Digital Sky Survey, Belczynski and his team found that this is only true for 50% of stars, while the rest have a significantly lower metallicity, at 20% of the Sun's.

The finding is particularly significant given the sensitivity of black hole binary formation to changes in metallicity. "If you reduce metallicity by a factor of ten then you increase the number of black hole binaries by a hundred or a few hundred times," says Tomasz Bulik, one of the researchers at the Nicolaus Copernicus Astronomical Center in Warsaw.
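As a rough illustration of what Bulik's rule of thumb implies, the sketch below assumes the number of black hole binaries follows a simple power law in metallicity. That power law is an assumption made here for illustration, not the model used by Belczynski's team, and the function names are hypothetical. The 50/50 split and the 20% figure are taken from the text above.

```python
import math


def power_law_index(boost_per_decade: float) -> float:
    """Index a such that N is proportional to Z**(-a), given the boost for a tenfold drop in Z."""
    return math.log10(boost_per_decade)


def binary_boost(metallicity_relative_to_sun: float, index: float) -> float:
    """Relative number of black hole binaries compared with solar metallicity (assumed power law)."""
    return metallicity_relative_to_sun ** (-index)


if __name__ == "__main__":
    # "A hundred or a few hundred times" per factor of ten; 300 stands in for "a few hundred".
    for boost_per_decade in (100.0, 300.0):
        index = power_law_index(boost_per_decade)
        low_z = binary_boost(0.2, index)        # stars at 20% of solar metallicity
        population = 0.5 * 1.0 + 0.5 * low_z    # half solar, half low-metallicity
        print(f"boost per decade {boost_per_decade:.0f}: "
              f"low-Z stars x{low_z:.0f}, whole population x{population:.0f}")
```

Even under this crude assumption, moving half of the stars to one-fifth of solar metallicity raises the expected number of binaries by an order of magnitude or more, which is the sense in which the finding matters for detector predictions.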

Imminent upgrades

The current generation of experiments that are searching for gravitational waves, such as LIGO and fellow detector VIRGO, fall short of the sensitivity that Belczynski's team predicts is required. However, ten-fold upgrades for both are imminent. "The upgrades mean that we are looking at reaching sensitivities at which this paper suggests we are guaranteed to see something," explained Stuart Reid, a gravitational wave researcher at the University of Glasgow, who is not involved in this research.

Intermediate upgrades to VIRGO could be online as early as this autumn, bringing the instrument to the edge of the range of sensitivities predicted by Belczynski. Both detectors are due to be fully upgraded by 2015. Should they find gravitational waves it would open up new possibilities for probing the cosmos, allowing astronomers to become stellar cartographers.

"As a neutron star inspirals into a black hole, gravitational waves are emitted, mapping out the space–time curvature formed by the black hole. Measuring those waves tells us how the black hole affects objects around it," says Reid. Gravitational wave astronomy also has advantages over electromagnetic radiation. "It's difficult to account for how light is affected as it travels towards you. Gravitational waves' interaction with matter is very weak, so you don't get the same distortion," he added.

Monday, 12 April 2010

Strange quark weighs in

A collaboration of particle physicists in Europe and North America have calculated the mass of strange quarks to an accuracy of better than 2%, beating previous results by a factor of 10. The result will help experimentalists to scrutinize the Standard Model of particle physics at accelerators such as CERN's Large Hadron Collider and Fermilab's Tevatron.

Quarks are elementary particles possessing familiar properties such as mass and charge, but they never exist as free particles. Instead they join together by the strong force into bound states called hadrons, which include the proton and the neutron. Theorists predict that a large portion of the hadron mass is accounted for by the strong force, mediated by particles known as gluons, and the exact nature of these interactions is still poorly understood.

Quark colour

To determine the mass of individual quarks, therefore, theorists have to combine experimental measurements of hadrons with calculations based on quantum chromodynamics (QCD) – the theory of the strong force. Refinements to this theory over the years have enabled experimentalists to calculate the mass of the heavier three quarks – the top, bottom and charm – to an accuracy of 1%. Unfortunately, however, it has been much harder to make accurate predictions for the mass of the three lighter quarks – the up, down and strange – and reference tables still contain errors of up to 30%.

Christine Davies at the University of Glasgow and her colleagues in the High Precision QCD collaboration have now finally produced an accurate figure for the mass of the strange quark by taking a different, mathematical approach. They have used a technique known as "lattice QCD", where quarks are defined as the sites of a lattice and their interaction via gluons represented on the connecting links.

Lattice QCD, which requires the use of powerful supercomputers, enabled the researchers to determine the ratio of the charm quark mass to the strange quark mass to an accuracy of 1%. Since the mass of the charm quark is well defined, Davies calculates that the strange quark has a mass of 92.4 MeV/c² plus or minus 2.5 MeV/c².
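The step from a mass ratio to a strange quark mass is simple division, with the uncertainties combined along the way. The sketch below shows that arithmetic under the assumption that the two inputs have independent errors, so their relative uncertainties add in quadrature. The input numbers are round placeholders chosen for illustration, not the collaboration's published values, and the function name is hypothetical.

```python
import math


def strange_mass(charm_mass_mev, charm_rel_err, ratio, ratio_rel_err):
    """Return (m_s, uncertainty) in MeV from the charm mass and the charm/strange mass ratio.

    Assumes the two inputs are uncorrelated, so relative errors add in quadrature.
    """
    m_s = charm_mass_mev / ratio
    rel_err = math.hypot(charm_rel_err, ratio_rel_err)
    return m_s, m_s * rel_err


if __name__ == "__main__":
    # Placeholder inputs: a charm mass of 1100 MeV known to 1%, and a ratio of 11.9 known to 1%.
    m_s, err = strange_mass(1100.0, 0.01, 11.9, 0.01)
    print(f"m_s = {m_s:.1f} +/- {err:.1f} MeV")
```

The point of the exercise is that a ratio known to 1% and a heavy-quark mass known to a similar precision together pin down the light-quark mass at the percent level, far better than the 30% errors mentioned earlier.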

Precision programme

This result is part of a programme of precision calculations in lattice QCD that will help experimentalists at accelerators like the LHC to make sense of the collisions they observe. They are of particular interest to researchers at the LHCb experiment who, by studying mesons made of bottom quarks, are trying to understand whether current physics can describe how our universe developed.

Indeed, many particle physicists believe that once the LHC is ramped up to 14 TeV it will be in a position to either confirm or destroy the Standard Model of particle physics. "This is all part of pinning down the Standard Model and asking how nature can tell the difference between matter and antimatter," says Christine Davies. In the short term, the High Precision QCD team intends to develop its research by using the same method for bottom quarks, to get accurate results for the bottom quark's mass and the decay rates of its hadrons, which LHCb needs.

David Evans, a researcher at the University of Birmingham and a member of the ALICE experiment at CERN, says that it is important to know quark masses for the pursuit of new physics. "If you want to predict new particles in higher energy states, it is very important to know the mass of its constituent parts," he says. "As far as I know, this is the only group to pin down the mass of light quarks to such high accuracy."